Bulletproof Event Delivery: How We Solved Data Inconsistency in BlinkFlow Using the Transactional Outbox Pattern

Building event-driven systems sounds straightforward until you hit the reliability wall. The nightmare scenario? Your data and events drift out of sync, leaving your system in an inconsistent state that's nearly impossible to recover from.

• The Consistency Problem That Keeps Developers Awake:

Picture this common workflow: save data to your database, then publish an event to your message broker. Simple, right? Wrong. This seemingly innocent two-step dance can fail spectacularly:

Scenario A: Database write succeeds, message publishing fails

Scenario B: Message gets published, then the database transaction rolls back

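Either way, the database and the event stream disagree about what happened. In Scenario A, downstream services never learn about a change that was committed. In Scenario B, they act on data that no longer exists. Neither failure raises an obvious alarm, which makes this kind of inconsistency a silent killer of your system's integrity.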
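To make Scenario A concrete, here is a minimal sketch of the naive two-step approach in Python, with an in-memory SQLite database standing in for the real datastore. The `flowrun` schema, `publish_to_broker`, and `handle_trigger_naively` are hypothetical names for illustration, not BlinkFlow's actual code, and the broker call is rigged to fail.

```python
import sqlite3

def publish_to_broker(topic: str, payload: str) -> None:
    # Hypothetical broker client that simulates an outage.
    raise ConnectionError("broker unavailable")

def handle_trigger_naively(conn: sqlite3.Connection, run_id: str, payload: str) -> None:
    # Write 1: persist the trigger and commit it.
    with conn:
        conn.execute("INSERT INTO flowrun (id, payload) VALUES (?, ?)", (run_id, payload))
    # Write 2: publish the event. If this raises, the committed row above stays behind.
    publish_to_broker("flowrun-events", payload)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE flowrun (id TEXT PRIMARY KEY, payload TEXT NOT NULL)")

try:
    handle_trigger_naively(conn, "run-1", '{"trigger": "new_row"}')
except ConnectionError as exc:
    print(f"publish failed: {exc}")

# Scenario A: the trigger is stored, but no downstream service will ever hear about it.
print(conn.execute("SELECT COUNT(*) FROM flowrun").fetchone()[0])  # → 1
```

Running the two writes in the opposite order (publish first, then commit) produces Scenario B instead; no ordering of two independent writes avoids both.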

• Why This Mattered for BlinkFlow:

We encountered this exact challenge while building BlinkFlow, our Zapier-style automation platform that connects applications to one another. Because BlinkFlow orchestrates workflows across multiple microservices using event streams, losing even a single trigger could break a user's entire automation.

Our backend architecture consists of four core services:

Primary Backend — handles authentication and workflow management

Flowrun Listener — captures incoming webhook triggers and logs them

Flowrun Producer — processes logged triggers and routes them to Kafka

Flowrun Executor — consumes Kafka messages and executes user actions

The critical weak point was our Flowrun Listener: every incoming trigger had to be both persisted in our database and forwarded to Kafka. Treating these as separate operations opened the door to lost triggers (Scenario A) or phantom runs with no database record behind them (Scenario B).

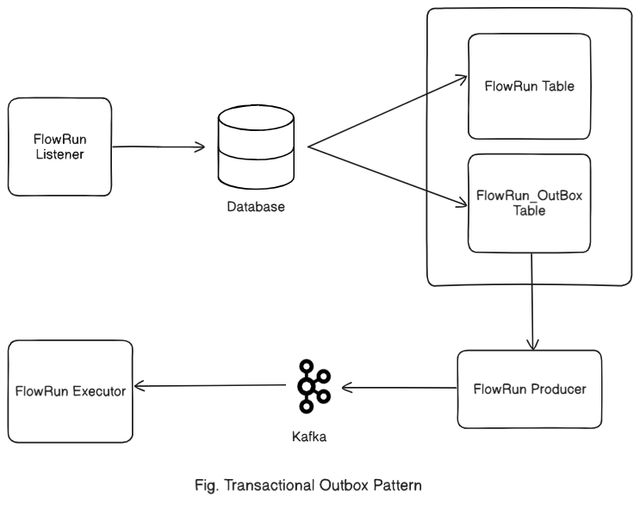

• The Transactional Outbox Pattern—Our Solution:

This pattern elegantly solves the dual-write problem through a staging approach:

Step 1: Instead of publishing directly to your message broker, write events to a dedicated outbox table within the same database transaction as your main data

Step 2: A background process reads from this outbox table and publishes events to the message broker

Step 3: Successfully published events are marked as processed in the outbox

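This approach guarantees that every committed change eventually reaches the message broker and that nothing is published for a transaction that rolled back. The broker may briefly lag behind the database, and an event may occasionally be published twice, but the two can no longer permanently diverge.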

• Implementation in BlinkFlow:

Phase 1: Secure Event Capture (Flowrun Listener)—

When webhooks arrive, our listener performs atomic dual writes:

Records the trigger in the flowrun table

Creates a matching entry in the flowrun_outbox staging table

Both operations execute within a single database transaction

Result: Either both writes succeed completely, or neither happens at all—no partial states possible.
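A minimal sketch of the listener's atomic write, again using Python and SQLite as a stand-in, since the post doesn't specify BlinkFlow's actual stack. The table names `flowrun` and `flowrun_outbox` come from the text; the columns, the `record_trigger` helper, and its demo-only `simulate_crash` flag are assumptions for illustration.

```python
import json
import sqlite3
import uuid

SCHEMA = """
CREATE TABLE flowrun (
    id       TEXT PRIMARY KEY,
    flow_id  TEXT NOT NULL,
    metadata TEXT NOT NULL
);
CREATE TABLE flowrun_outbox (
    id           INTEGER PRIMARY KEY AUTOINCREMENT,
    flowrun_id   TEXT NOT NULL,
    processed_at TEXT  -- stays NULL until the producer confirms delivery
);
"""

def record_trigger(conn: sqlite3.Connection, flow_id: str, body: dict,
                   simulate_crash: bool = False) -> str:
    """Persist a webhook trigger and its outbox entry atomically (hypothetical helper)."""
    run_id = str(uuid.uuid4())
    # `with conn` commits if the block succeeds and rolls back if it raises,
    # so both INSERTs land together or not at all.
    with conn:
        conn.execute(
            "INSERT INTO flowrun (id, flow_id, metadata) VALUES (?, ?, ?)",
            (run_id, flow_id, json.dumps(body)),
        )
        if simulate_crash:  # demo only: fail between the two writes
            raise RuntimeError("listener crashed mid-transaction")
        conn.execute("INSERT INTO flowrun_outbox (flowrun_id) VALUES (?)", (run_id,))
    return run_id

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

record_trigger(conn, "flow-1", {"event": "new_email"})
try:
    record_trigger(conn, "flow-1", {"event": "new_email"}, simulate_crash=True)
except RuntimeError:
    pass  # the half-finished trigger was rolled back

counts = (
    conn.execute("SELECT COUNT(*) FROM flowrun").fetchone()[0],
    conn.execute("SELECT COUNT(*) FROM flowrun_outbox").fetchone()[0],
)
print(counts)  # → (1, 1)
```

The call that crashes between the two inserts leaves no orphaned `flowrun` row behind, which is the "no partial states" property in practice.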

Phase 2: Reliable Event Publishing (Flowrun Producer)—

Our producer service continuously:

Scans the flowrun_outbox table for unprocessed events

Publishes these events to Kafka

Marks records as processed only after confirmed delivery
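The producer's relay step can be sketched the same way. `relay_batch`, the `publish` callback, and the `flowrun-events` topic name are hypothetical: in a real deployment `publish` would wrap a Kafka producer send that returns only once the broker acknowledges the write and raises otherwise. What matters is the ordering (publish first, mark processed second), and the demo simulates a Kafka outage to show rows simply waiting in the outbox.

```python
import sqlite3
from datetime import datetime, timezone
from typing import Callable

# Hypothetical signature: publish(topic, key) returns only after the broker
# acknowledges the write, and raises if delivery cannot be confirmed.
Publish = Callable[[str, str], None]

def relay_batch(conn: sqlite3.Connection, publish: Publish, batch_size: int = 100) -> int:
    """Publish pending outbox rows in order; return how many were marked processed."""
    rows = conn.execute(
        "SELECT id, flowrun_id FROM flowrun_outbox "
        "WHERE processed_at IS NULL ORDER BY id LIMIT ?",
        (batch_size,),
    ).fetchall()
    sent = 0
    for outbox_id, flowrun_id in rows:
        try:
            publish("flowrun-events", flowrun_id)
        except Exception:
            break  # broker down: leave this and later rows pending for the next poll
        # Mark as processed only after publish() has returned successfully.
        with conn:
            conn.execute(
                "UPDATE flowrun_outbox SET processed_at = ? WHERE id = ?",
                (datetime.now(timezone.utc).isoformat(timespec="microseconds"), outbox_id),
            )
        sent += 1
    return sent

# Demo: two pending outbox rows and a broker that starts out unavailable.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE flowrun_outbox ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, flowrun_id TEXT NOT NULL, processed_at TEXT)"
)
with conn:
    conn.executemany("INSERT INTO flowrun_outbox (flowrun_id) VALUES (?)", [("run-1",), ("run-2",)])

delivered: list[str] = []
broker_up = False

def fake_publish(topic: str, key: str) -> None:
    if not broker_up:
        raise ConnectionError("Kafka unavailable")
    delivered.append(key)

print(relay_batch(conn, fake_publish))  # → 0 (outage: rows stay in the outbox)
broker_up = True
print(relay_batch(conn, fake_publish))  # → 2 (both published, then marked)
```

A real producer would call something like `relay_batch` in a loop with a sleep between polls; that interval is the database-load-versus-latency knob. Because a row is marked only after `publish` returns, a crash between those two steps sends the same event again on the next poll, so consumers must tolerate duplicates. To run several producer instances against PostgreSQL, a common approach is to claim rows with `SELECT ... FOR UPDATE SKIP LOCKED`; SQLite has no equivalent, so this sketch assumes a single relay.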

Phase 3: Action Execution (Flowrun Executor)—

The executor consumes Kafka messages and triggers user-defined actions like API calls, email sends, or data storage operations.

• The Transformative Benefits:

Absolute Reliability: Events never vanish, even during Kafka outages or network failures

Clean Separation: Our listener remains completely decoupled from Kafka infrastructure

Independent Scaling: We can tune producer throughput without affecting other services

Fault Tolerance: Temporary Kafka unavailability just queues events in the outbox until recovery

• Hard-Won Insights:

Polling Optimization: Balancing database load against processing latency required careful tuning

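Duplicate Handling: Message duplication can still occur (for example, if the producer crashes after Kafka acknowledges an event but before the outbox row is marked processed), so building idempotent executors became essential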

Storage Management: Implementing automated outbox cleanup prevented unbounded table growth
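Two of these insights lend themselves to a short sketch, again in Python with SQLite as a stand-in. `execute_once` makes the executor idempotent by claiming each `flowrun_id` in a hypothetical `executed_flowrun` table, inside the same transaction as the action's own database writes. `purge_processed_outbox` deletes outbox rows that were delivered longer ago than a configurable retention window. Both function names, the extra table, and the 7-day window are assumptions. One caveat: if the action calls an external API and the process dies before the commit, the claim rolls back and the call can repeat, so end-to-end idempotency also needs the downstream API to accept some kind of idempotency key.

```python
import sqlite3
from datetime import datetime, timedelta, timezone
from typing import Callable

def execute_once(conn: sqlite3.Connection, flowrun_id: str,
                 action: Callable[[str], None]) -> bool:
    """Run action for flowrun_id unless it has already run; return True if it ran."""
    with conn:
        try:
            # The PRIMARY KEY rejects a second claim on the same flowrun.
            conn.execute("INSERT INTO executed_flowrun (flowrun_id) VALUES (?)", (flowrun_id,))
        except sqlite3.IntegrityError:
            return False  # duplicate delivery: skip it
        # If action raises, the claim above is rolled back so a retry can run it.
        action(flowrun_id)
    return True

def purge_processed_outbox(conn: sqlite3.Connection, older_than: timedelta) -> int:
    """Delete outbox rows delivered more than `older_than` ago; return rows removed."""
    cutoff = (datetime.now(timezone.utc) - older_than).isoformat(timespec="microseconds")
    with conn:
        # ISO-8601 UTC strings in one fixed format sort chronologically as text.
        cur = conn.execute(
            "DELETE FROM flowrun_outbox WHERE processed_at IS NOT NULL AND processed_at < ?",
            (cutoff,),
        )
    return cur.rowcount

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE executed_flowrun (flowrun_id TEXT PRIMARY KEY)")
conn.execute(
    "CREATE TABLE flowrun_outbox (id INTEGER PRIMARY KEY, flowrun_id TEXT NOT NULL, processed_at TEXT)"
)

calls: list[str] = []
print(execute_once(conn, "run-1", calls.append))  # → True
print(execute_once(conn, "run-1", calls.append))  # → False (redelivered duplicate)

old = (datetime.now(timezone.utc) - timedelta(days=30)).isoformat(timespec="microseconds")
with conn:
    conn.executemany(
        "INSERT INTO flowrun_outbox (flowrun_id, processed_at) VALUES (?, ?)",
        [("run-1", old), ("run-2", None)],
    )
print(purge_processed_outbox(conn, timedelta(days=7)))  # → 1 (only the old, processed row)
```

Unprocessed rows (`processed_at IS NULL`) are never purged, so cleanup cannot race ahead of delivery.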

• The Bottom Line:

For BlinkFlow, the Transactional Outbox Pattern evolved from an architectural nicety to an operational requirement. It delivered the ironclad guarantee that user triggers would flow reliably through our entire execution pipeline without data corruption.

If your architecture bridges databases and message brokers, don't gamble with partial failures. The Transactional Outbox Pattern offers a battle-tested, straightforward solution that has proven its worth in many production systems.

The pattern is simple to implement, but its impact on system reliability is profound.